One of the key aspects of Power BI is the Power BI Marketplace, an online store where you can pick up a wide range of visualisations developed by Microsoft or the wider community.

Scanning the Marketplace, there is a wide range of visualisations, from the very useful, through those with niche uses, to (unfortunately) a number that should be considered “chart junk” because they don’t meet data visualisation best practices.

The visuals available within the Marketplace raise a number of key questions for a corporation:

- Why is there a need to go beyond the default visualisations available within the tool?

- How do users find the most suitable visual that meets their needs?

- How do you train a user on how to use that visual, and use it for the right purpose?

- How do you advise users on which visuals are the best ones and which ones to avoid?

- If a visual doesn’t meet requirements, how do end users proceed?

- A number of useful visuals require separate payment/license to get a full version. How does a corporation deal with this?

- What are the long-term considerations of using visuals from the Marketplace? How will they be supported in the long term? What happens if they are neglected?

- What are the security considerations of visuals available from the Marketplace?

I will explore each of these questions in more detail, but I don’t have all the answers yet and would invite Microsoft, consultancies and the wider community to offer their views on these matters.

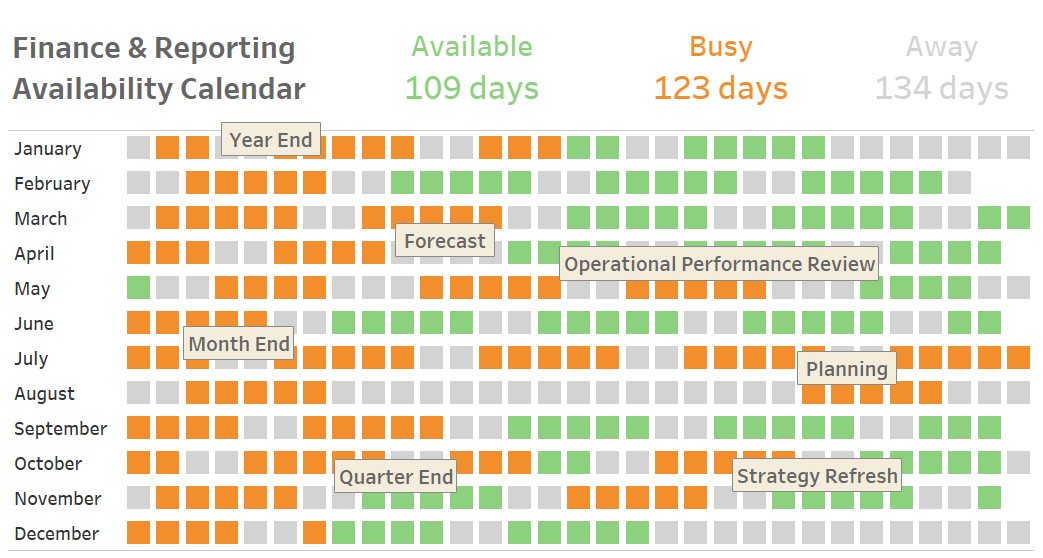

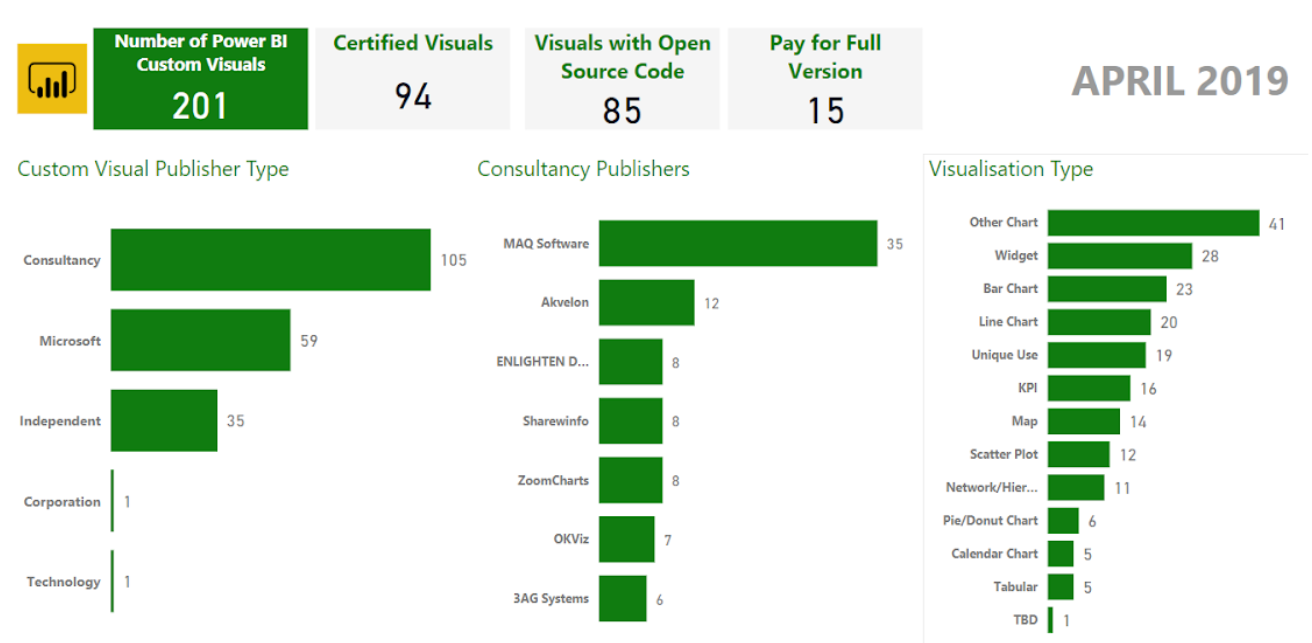

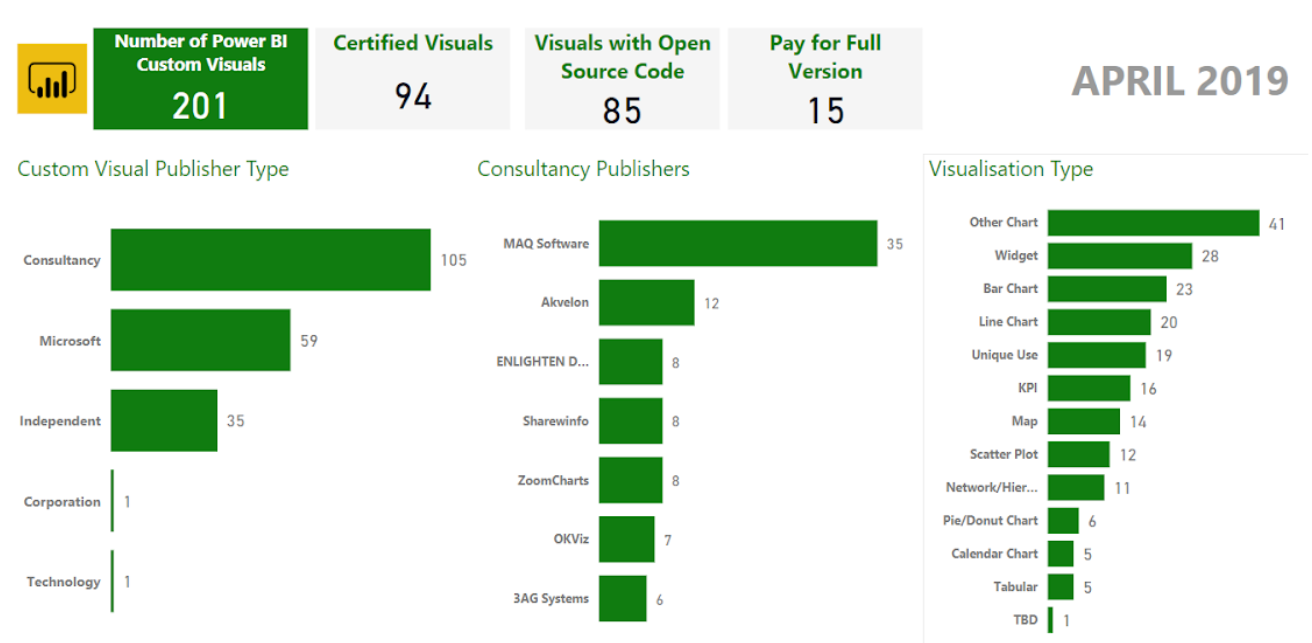

To support my assessment, I carried out some analysis of the visuals in the Marketplace. My categorisation is brief and open to interpretation/mistake, but it was good enough to give me a sense of what is in the Marketplace. Please see the high-level numbers in the dashboard above. If Microsoft are able to make available a data source of the available Custom Visuals, that would be useful for next time!

Why is there a need to go beyond the default visualisations available within the tool?

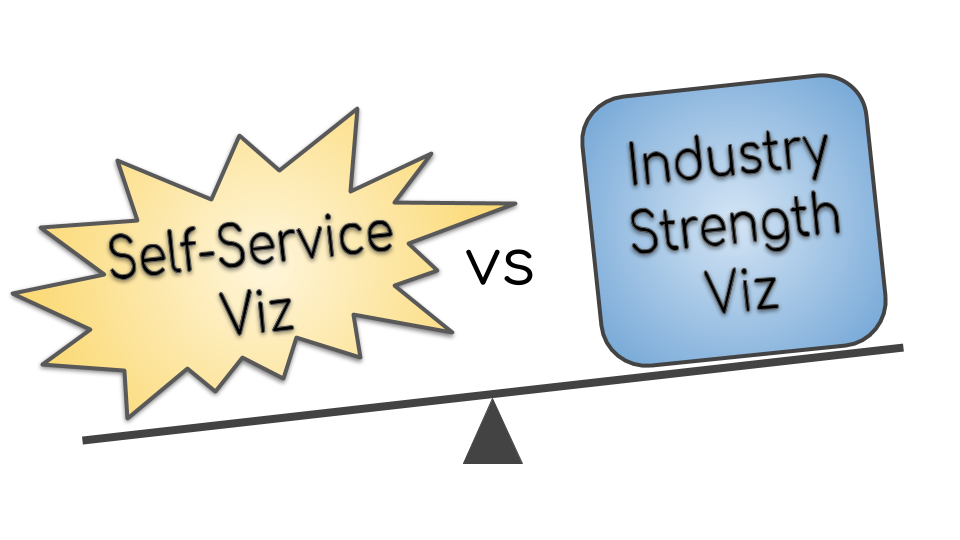

The paradigm of how Power BI works is that a visual comes with a preset design, which you can configure as far as that design allows. Users sometimes find that this preset design constrains them in achieving the visual they need for their use case, or that the type of visual they need isn’t available. They will therefore look to the Marketplace to find an enhanced or different kind of visual to meet their needs.

For example, I have a use case where I want to use a line chart over time (e.g. one line for each product’s price) and colour the lines based on another category (e.g. there are several brands, each with several products). However, with the Line chart that comes with Power BI I can only set the colours manually, which is fairly tedious, especially with lots of categories and lots of visuals; I can’t control them with another dimension. That will become a problem over time as new products get added. Therefore, can I find a visual on the Marketplace that meets this need?
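To make the requirement concrete, here is a minimal Python sketch of the behaviour I’m after, independent of Power BI. Each product line takes its colour from its brand, so a newly added product picks up the right colour without manual work. The product names, brands, hex colours and the `line_colours` helper are all made up for illustration; none of this is a Power BI API.

```python
# Hypothetical data: which brand each product belongs to.
PRODUCT_BRAND = {
    "Widget A": "Acme",
    "Widget B": "Acme",
    "Gadget X": "Globex",
}

# One colour per brand (the second dimension), not one per product.
BRAND_COLOUR = {
    "Acme": "#1f77b4",
    "Globex": "#ff7f0e",
}

DEFAULT_COLOUR = "#7f7f7f"  # fallback for brands with no assigned colour


def line_colours(product_brand, brand_colour, default=DEFAULT_COLOUR):
    """Return a colour for every product line, derived from its brand."""
    return {
        product: brand_colour.get(brand, default)
        for product, brand in product_brand.items()
    }


# A new product only needs a brand; its line colour follows automatically.
PRODUCT_BRAND["Gadget Y"] = "Globex"
colours = line_colours(PRODUCT_BRAND, BRAND_COLOUR)
```

The point of the sketch is that colours are maintained in one place per brand, rather than per product per visual.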

How do users find the most suitable visual that meets their needs?

Users can search the marketplace and Microsoft have created a categorisation to try and aid users in this as well.

Currently there are over 200 visuals for Power BI in the Marketplace. You would expect this to continue to grow over time.

If you search for Line Chart for example, you currently get over 75 results. So as an end user, you are going to have to spend time reviewing the results to try and find a visual that meets your requirement. This does often result in having to try many different visuals to find the one that does the job (assuming there is one that does meet the requirements). Therefore, an end user would look for recommendations to save themselves time and effort. Microsoft have their Editors Picks and other categorisations to help end users, but I’m convinced more could be done to help users.

How do you advise users on which visuals are the best ones and which ones to avoid?

As a corporation, I would have a central team and/or SMEs review the Power BI visuals available in the Marketplace and provide recommendations on which ones to use, so that end users are able to arrive at a solution more quickly.

It would be very useful for an organisation to be able to configure their recommended visuals through the Power BI interface, similar to how an organisation can make custom visuals available: https://docs.microsoft.com/en-us/power-bi/power-bi-custom-visuals-organization

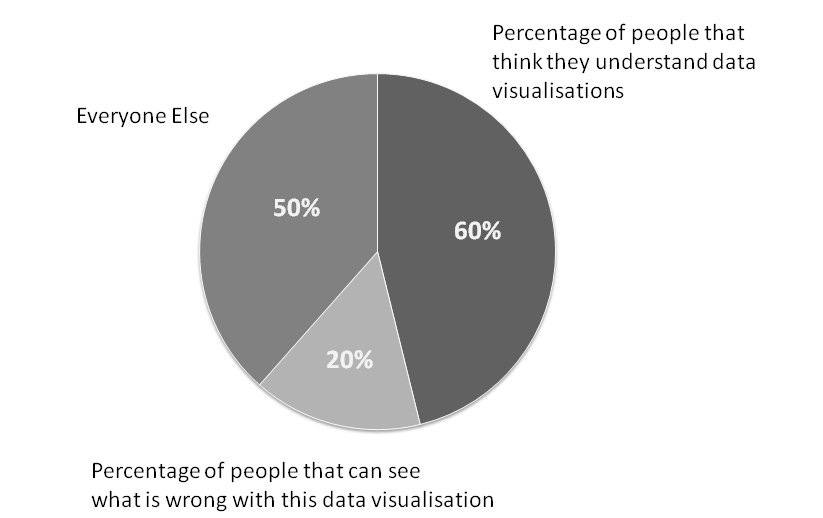

Data visualisation best practice is key to delivering successful dashboards, and educating end users on this topic is no small undertaking. It would be prudent for organisations to advise users to avoid visuals that don’t meet best practices, as well as ones that are not well developed and have functional limitations (e.g. lack interactive filtering), have significant bugs or don’t meet security requirements. Perhaps the option for an organisation to block such visuals would be a sensible approach.

How do you train a user on how to use that visual, and use it for the right purpose?

Each custom visual has a description, but further information about how to use it and when to use it is highly variable. Some are well documented and give videos and so forth, either on the store page or via links to comprehensive webpages. Others just have a brief description and a screenshot or two.

An option is to search for advice. For instance, on the Pragmatic Works blog, Devin Knight has gone to a lot of effort to provide training for a good number of the custom visuals: http://blog.pragmaticworks.com/topic/power-bi-custom-visuals

Either way, there is a need to educate end users on visualisation best practices so they can choose the right visual or set of visuals for their use case. This is an in-depth topic in itself, but every organisation should consider how to create this capability. Otherwise you end up with a mish-mash of reports with poor visual choices and any number of other issues, which will ultimately lead to the reports not being used.

If a visual doesn’t meet requirements, how do end users proceed?

If the visual meets the majority of requirements, it would be pragmatic to first approach the publisher of that visual with feedback and ask them to enhance it. This of course may or may not happen and the time scale for any feedback to be incorporated could be highly variable.

The second approach is to create your own custom visual, potentially building on the existing one if it is open source code. https://docs.microsoft.com/en-us/power-bi/developer/custom-visual-develop-tutorial

However, this is going to be beyond the ability of your average end user, as it requires programming as well as a fair amount of time and effort. It would make sense either to outsource the requirement to a consultancy that builds visuals or, if available, to use an in-house team/subject matter expert who can be assigned to develop the required visual.

This obviously has a negative impact on delivery timescales, and end users will have to either work within the limitations of the visuals that are available or put things on hold until the required visual is developed.

A number of useful visuals require separate payment/license to get a full version. How does a corporation deal with this?

Within most corporations getting things through Procurement and Digital Security is no small undertaking.

Currently there are a number of visuals (I made it 15 at the time of writing) that only provide a functionally limited/watermarked version through the Marketplace and you have to go to an external site and make necessary payments to get the full version and license.

Having a brief scan of these sites, I think there are the following challenges when considering this:

- There is a wide variety of licensing arrangements proposed, which may or may not work for your organisation depending on its size and global locations.

- Some of these visuals have been developed by independent persons/small consultancies, so there is little “security” in the long term support of such visuals.

- It will likely take considerable time for an organisation to process the procurement of the visual from a new supplier and make the necessary payments. If the visual is being purchased from an independent or small consultancy, the likelihood of this being approved is probably quite low.

- It will also take time to perform the necessary security checks on such visuals. And the provider of the visual may not be willing to provide the source code to get it validated so you could end up at a bit of an impasse. There will also be the need to revalidate the code every time there is an update.

Obviously the developers of these custom visuals have put a considerable amount of time and effort into building them and have a business case to get payment.

I’m not sure what the right way forward on this question is. Perhaps Microsoft can provide an ecosystem for appropriate payment that would be more acceptable for corporations to process. Microsoft have recently implemented an approach to certify and then flag the custom visual as requiring further purchase: https://powerbi.microsoft.com/en-us/blog/new-monetization-option-for-power-bi-custom-visuals-through-appsource/ As far as I can tell, though, you still need to engage directly with the 3rd party for the full license key. So this doesn’t solve the procurement issues, but it does cover the security issues, as the visual is certified and therefore checked by Microsoft.

Perhaps Microsoft should come to some arrangement to provide such visuals as part of licensing arrangements with organisations and then manage payment and set-up with these third party providers themselves?

Organisations also run the risk that end users circumvent procurement and digital security processes and purchase directly from the developers. I would hope that the Power BI service can be monitored for use of non-approved custom visuals, so this is something that should be considered by an organisation.

Not that I’ve done an extensive search, but I can also see that there are suppliers who don’t offer their custom visuals through the Marketplace at all, e.g. Zebra BI https://zebrabi.com/power-bi-custom-visuals/ and therefore an organisation would need to seek them out and ascertain for themselves if there is value in what they provide given any monetary, commercial and security considerations.
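As a rough illustration of what such monitoring could look like once an organisation has a usage inventory in hand, here is a minimal Python sketch that flags any custom visual not on an approved list and shows which reports use it. The approved list, the report names, the visual names and the `find_unapproved` helper are all hypothetical, and how the inventory is obtained from the Power BI service is deliberately left out, since I don’t know what the service exposes for this.

```python
from collections import defaultdict

# Hypothetical approved list maintained by a central team.
APPROVED_VISUALS = {"Approved Bullet Visual", "Approved Slicer Visual"}

# Hypothetical usage inventory: (report name, custom visual name) pairs.
# Obtaining this inventory is outside the scope of the sketch.
USAGE = [
    ("Sales Overview", "Approved Bullet Visual"),
    ("Sales Overview", "Unvetted 3D Pie"),
    ("Pricing Trends", "Approved Slicer Visual"),
    ("Pricing Trends", "Unvetted 3D Pie"),
]


def find_unapproved(usage, approved):
    """Map each non-approved visual to the sorted list of reports using it."""
    flagged = defaultdict(set)
    for report, visual in usage:
        if visual not in approved:
            flagged[visual].add(report)
    return {visual: sorted(reports) for visual, reports in flagged.items()}


flagged_visuals = find_unapproved(USAGE, APPROVED_VISUALS)
```

Grouping by visual rather than by report makes it easier to decide, visual by visual, whether to review it, approve it or ask report owners to replace it.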

What are the long-term considerations of using visuals from the Marketplace? How will they be supported in the long term? What happens if they are neglected?

It would be reasonable to assume that there will be continued growth in the number of visuals available in the Marketplace, to the point where there could easily be several hundred or more. This would exacerbate the challenge of finding the right visual for your use case.

As Microsoft updates Power BI over the coming months and years, there is a risk that a custom visual stops working (in part or in its entirety) due to updates in the API. If the developer of the visual is no longer able or willing to maintain it, what happens? There is also the risk that new key Power BI functionality is not incorporated into the visual, and therefore it becomes obsolete.

Will Microsoft “cleanse” the Marketplace of such visuals? How will the impact to end users be managed if they have used one of these legacy visuals that is now no longer supported? Will Microsoft pick up any of these legacy visuals and provide continued support themselves to mitigate this risk?

Even briefly reading the reviews and community articles, it comes across that some of the current set of visuals available in the Marketplace have bugs or already lack key features that users come to expect. I don’t have time for an in-depth assessment to verify, but it does raise concerns on the quality of custom visuals available in the Marketplace.

What are the security considerations of visuals available from the Marketplace?

Anyone can submit a visual to the Marketplace. Microsoft also allow developers to get their visual certified, provided it meets specific criteria:

https://docs.microsoft.com/en-us/power-bi/power-bi-custom-visuals-certified

https://docs.microsoft.com/en-us/power-bi/power-bi-custom-visuals-certified?#certification-requirements

Here is the list of Power BI Custom Visuals that have been certified. Note that Microsoft can remove this certification at any time.

https://docs.microsoft.com/en-us/power-bi/power-bi-custom-visuals-certified#list-of-custom-visuals-that-have-been-certified

There is a risk that any custom visual that has not been certified by Microsoft could send data externally. Here are a few blog posts on the subject.

https://www.efexcon.com/blog/are-all-custom-visuals-in-power-bi-secure

https://datasavvy.me/2019/02/28/what-data-is-being-sent-externally-by-power-bi-visuals/

Therefore, as a corporation I would want to block the use of any non-certified custom visuals unless they have been reviewed by Digital Security and approved. These reviews may not be possible if the custom visual is not an open source visual unless the third party is willing to share their code.
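The rule I have in mind can be written down as a small decision function. The following Python sketch is only an illustration of that policy under my own assumptions: the `Visual` record, its field names and the example visuals are hypothetical, and this is not how Power BI itself enforces anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visual:
    """Hypothetical record an organisation might keep per custom visual."""
    name: str
    certified: bool                  # currently certified by Microsoft
    security_reviewed: bool = False  # approved by Digital Security
    blocked_by_org: bool = False     # explicitly disallowed, e.g. chart junk


def is_allowed(visual):
    """Allow a visual only if it is certified or reviewed, and not blocked."""
    if visual.blocked_by_org:
        return False
    return visual.certified or visual.security_reviewed


examples = [
    Visual("Certified Line", certified=True),
    Visual("Reviewed Gantt", certified=False, security_reviewed=True),
    Visual("Unknown Gauge", certified=False),
    Visual("Certified 3D Pie", certified=True, blocked_by_org=True),
]
allowed_names = [v.name for v in examples if is_allowed(v)]
```

Because certification is an input rather than a one-off approval, re-running the check after a visual loses its certification would flip the result unless Digital Security has reviewed it separately.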

If I’m running the Power BI Service, is there a way to ensure that custom visuals don’t connect to the internet without approval by the organisation? How does a corporation manage this risk effectively? The current situation suggests a number of processes would need to be put in place.

Microsoft also suggest that the Certification could be removed at any point, so organisations that rely on it would also have to consider this.

Conclusions

The current situation poses a number of key questions and I recommend organisations carefully consider the impact of the Power BI Marketplace now and for the future to ensure they don’t run into some of the challenges outlined such as insecure visualisations and end users being overwhelmed by what to do. My key recommendations for an organisation are:

- Produce and maintain a curated list of which custom visuals end users should consider using first.

- Block custom visuals that aren’t certified, to ensure security, along with any other custom visuals that the organisation does not want end users to consider using.

- Put in place a process for a team/3rd party to develop custom visuals on behalf of end users if there is a use case to do so.

- Consider how to process procurement of custom visuals that require payment if there is a use case to do so.

- As part of a wider consideration, grow end user capability in terms of data visualisation best practices so that end users use the right custom visual for their use case.

I welcome any feedback and input on this topic; please comment or get in touch.